So in some versions of my installation, I have to have multiple sensors routed to the same lights (because of room size or budget constraints). This usually means 2 sets of 2 sensors, each set controlling 1 light. I think that means using [beam.join] in merge mode/weighted average ([beam.join 3] in this case).

But I can’t see from the help file what tag I need to add to the fixtures file, or what its value should be. Once I’ve done that, do I then set the actual mix using an @mix attribute on the object? [beam.join 3 @mix 0.8 0.8 0.8] ???

First bit of my Fixtures file…

```json
{
  "fixtures" : [ {
    "channel" : 1,
    "children" : [ ],
    "id" : "A1",
    "name" : "A1",
    "ranges" : [ ],
    "tags" : [ {
      "index" : 60,
      "name" : "Grset"
      SOMETHING GOES HERE? BUT WHAT?
    }
    ],
    "testOn" : 0,
    "type" : "Generic RGB",
    "universe" : 1
  }
  ,
```

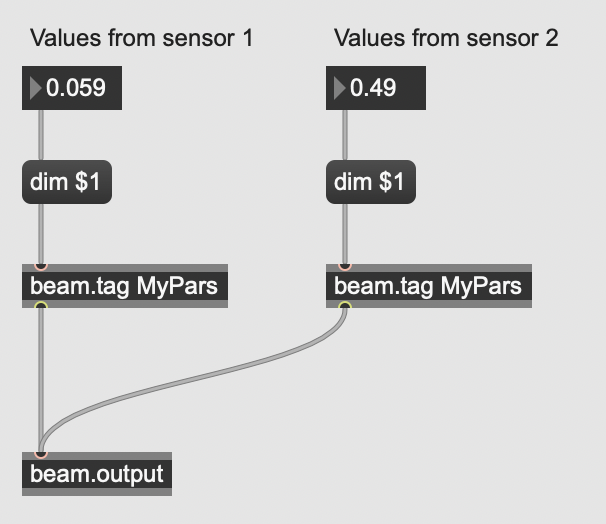

The most basic way to control the same light from two or more sources is to use multiple [beam.output] objects or connect multiple patch cords to the same inlet. This merges the signals using the merge modes defined in the light’s fixture profile.

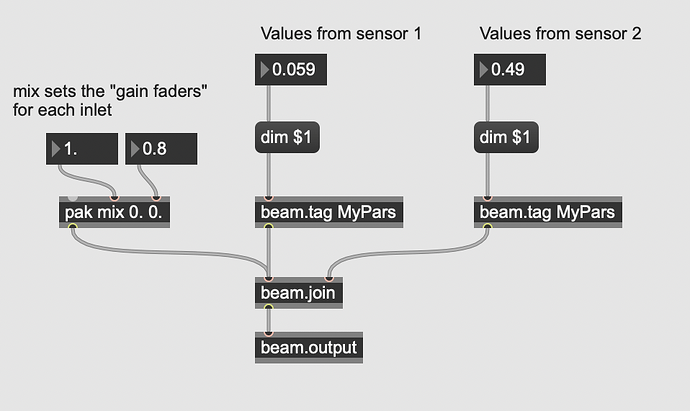

You can also use [beam.join], which gives you a bit more control: mainly the mix message, which lets you set how much each inlet contributes to the mix. Kinda like a volume fader in a DAW. With two signals, mix 1. 1. gives the same result as connecting both patch cords to the same inlet, and mix 1. 0. passes only the signal from the left-most inlet.
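To make the “volume fader” idea concrete, here is a minimal Python sketch of what the mix message does for a parameter using the addition merge mode. It is a model of the behaviour described above, not Beam’s code: `join_addition` is a hypothetical name, and the sketch assumes values are normalised to 0.0 to 1.0 and clipped after summing.

```python
def join_addition(values, mix):
    """Model of [beam.join] + addition: scale each inlet by its mix value, then sum.

    Hypothetical sketch; assumes normalised 0.0-1.0 values clipped after summing.
    """
    if len(values) != len(mix):
        raise ValueError("need exactly one mix value per inlet")
    total = sum(value * gain for value, gain in zip(values, mix))
    return min(max(total, 0.0), 1.0)


a, b = 0.5, 0.25
print(join_addition([a, b], [1.0, 1.0]))  # 0.75, same as merging both cords into one inlet
print(join_addition([a, b], [1.0, 0.0]))  # 0.5, only the left-most inlet passes
```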

The argument (3) you see in the help files sets how many inlets the object has. Inspired by Max’s [join] object.

What is important to note is that the merge mode is not defined by the [beam.join] object. Instead, this is defined by the light’s fixture profile. If you take a look at one of the .sbf files in the package (e.g., Generic RGB.sbf) you’ll see a section like this:

```json
"modulation": {
    "blue": {
        "attribute": "Blue",
        "mergeMode": "addition"
    },
    "green": {
        "attribute": "Green",
        "mergeMode": "addition"
    },
    "red": {
        "attribute": "Red",
        "mergeMode": "addition"
    }
},
```

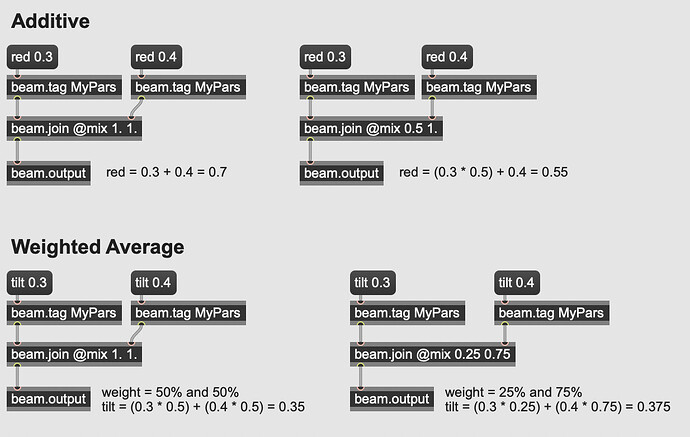

The mergeMode sets how the values for that parameter are merged together. We currently have three: addition, weightedaverage and htp. In the Generic RGB fixture, all colors are simply added together. Lights like moving heads usually use weightedaverage for parameters like tilt and pan. htp is mostly used for parameters like color wheels and gobos. The [beam.join] help file contains some tabs with interactive examples of how those modes work.
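A rough Python sketch of how the three modes differ when every inlet sits at mix 1. It is an illustration under assumptions, not Beam’s implementation: `merge` is a hypothetical name, values are assumed normalised to 0.0 to 1.0, addition is assumed to clip at 1.0, and htp is assumed to follow the usual DMX meaning of “highest takes precedence”.

```python
def merge(values, mode):
    """Merge several sources for one parameter, all inlets at mix 1.

    Hypothetical sketch: values normalised to 0.0-1.0, addition clipped at 1.0,
    and htp assumed to mean "highest takes precedence".
    """
    if not values:
        raise ValueError("need at least one value")
    if mode == "addition":
        return min(sum(values), 1.0)
    if mode == "weightedaverage":
        return sum(values) / len(values)  # equal weights
    if mode == "htp":
        return max(values)
    raise ValueError(f"unknown merge mode: {mode}")


sources = [0.5, 0.25, 0.75]
for mode in ("addition", "weightedaverage", "htp"):
    print(mode, merge(sources, mode))
# addition 1.0
# weightedaverage 0.5
# htp 0.75
```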

Addition and weightedaverage are probably the most relevant for you. In combination with [beam.join] they work like this:

Addition: each value is first multiplied by its value from the mix message, then the results are added together.

Weighted average: the values are averaged and the weights are defined by the mix message.

- mix 1. 1. means that both signals contribute equally, resulting in an even average: ((a * 0.5) + (b * 0.5)).
- mix 1. 0. means that only signal A contributes: ((a * 1.0) + (b * 0.0)).
- mix 2. 1. means that signal A contributes about 67% (2.0 / (2.0 + 1.0)) and signal B about 33% (1.0 / (2.0 + 1.0)). So the final calculation is roughly ((a * 0.67) + (b * 0.33)).
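The weighted-average rule can be written as: divide each mix value by the sum of all mix values, then use those as weights. Here is a minimal Python sketch of that arithmetic; `join_weighted_average` is a hypothetical name and this models the behaviour described above rather than reproducing Beam’s internals.

```python
def join_weighted_average(values, mix):
    """Model of [beam.join] + weightedaverage: mix values act as weights.

    Hypothetical sketch; each weight is divided by the sum of all mix values.
    """
    if len(values) != len(mix):
        raise ValueError("need exactly one mix value per inlet")
    total_weight = sum(mix)
    if total_weight == 0:
        raise ValueError("at least one mix value must be non-zero")
    return sum(value * weight for value, weight in zip(values, mix)) / total_weight


a, b = 1.0, 0.25
print(join_weighted_average([a, b], [1.0, 1.0]))  # 0.625 = (a * 0.5) + (b * 0.5)
print(join_weighted_average([a, b], [1.0, 0.0]))  # 1.0 = a only
print(join_weighted_average([a, b], [2.0, 1.0]))  # 0.75 = (a * 2/3) + (b * 1/3)
```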

Here’s a screenshot of a patch that might make this clearer.

If you just want to merge colors for colored LED pars, the addition mode used by Generic RGB should be fine. If you want to customize this, you can duplicate the .sbf file and change the merge mode manually.
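If you’d rather script the edit than do it by hand, here is a minimal Python sketch. It assumes the .sbf file is plain JSON (the fragment above suggests so) and that you want every mergeMode in the profile changed; `set_merge_mode` and `copy_profile_with_merge_mode` are hypothetical helpers, not part of Beam.

```python
import json
from pathlib import Path


def set_merge_mode(node, mode):
    """Return a copy of a parsed profile with every "mergeMode" value replaced."""
    if isinstance(node, dict):
        return {
            key: mode if key == "mergeMode" else set_merge_mode(value, mode)
            for key, value in node.items()
        }
    if isinstance(node, list):
        return [set_merge_mode(item, mode) for item in node]
    return node


def copy_profile_with_merge_mode(src, dst, mode):
    """Read a JSON fixture profile from src, change its merge modes, write it to dst."""
    profile = json.loads(Path(src).read_text(encoding="utf-8"))
    updated = set_merge_mode(profile, mode)
    Path(dst).write_text(json.dumps(updated, indent=4), encoding="utf-8")
```

Usage would be something like `copy_profile_with_merge_mode("Generic RGB.sbf", "Generic RGB Average.sbf", "weightedaverage")`, where the output file name is only an example. The sketch doesn’t touch any name field, so check how Beam identifies fixture profiles before relying on the copy.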

Hope this helps!

To clarify one more thing: we have two types of JSON files - the fixture profile (.sbf) and the patch file (.sbp). Beam for Max saves the patch file as .json.

The fixture profile contains information about a specific light. It allows Beam to know how to control a light - e.g., which DMX addresses to use, and whether the light is an RGB or CMY light. This file contains the mergeMode information I talked about above. We want to ship with many fixture profiles and sensible defaults, so you probably only have to touch them if you want to customize something, or when you have a custom light.

The patch file contains information about what lights you use, to what universes and addresses they are patched, and which lights are part of which tags. These change depending on your lighting setup.
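To illustrate what a patch file holds, here is a small Python sketch that reads the structure from the snippet in the first post: it groups fixture ids by tag name and lists the fixture profiles (the "type" field) the patch refers to. Fixture "A2" is made up for the example, and reading "type" as the profile name is an assumption based on "Generic RGB" matching Generic RGB.sbf.

```python
import json
from collections import defaultdict


def fixtures_by_tag(patch):
    """Group fixture ids by tag name in a parsed patch file."""
    groups = defaultdict(list)
    for fixture in patch.get("fixtures", []):
        for tag in fixture.get("tags", []):
            groups[tag["name"]].append(fixture["id"])
    return dict(groups)


def profiles_used(patch):
    """Collect the fixture profile types referenced by the patch file."""
    return {fixture["type"] for fixture in patch.get("fixtures", [])}


# "A1" follows the snippet from the first post; "A2" is hypothetical.
example = json.loads("""
{"fixtures": [
  {"id": "A1", "type": "Generic RGB", "universe": 1, "channel": 1,
   "tags": [{"index": 60, "name": "Grset"}]},
  {"id": "A2", "type": "Generic RGB", "universe": 1, "channel": 4,
   "tags": [{"index": 60, "name": "Grset"}]}
]}
""")
print(fixtures_by_tag(example))  # {'Grset': ['A1', 'A2']}
print(profiles_used(example))    # {'Generic RGB'}
```

Note that nothing in the patch file mentions mergeMode; it only points at the profile by name.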

The snippet you shared in your initial post is from a patch file, so it doesn’t contain the merge mode information you’re interested in. If you want to customize the mergeMode, you’ll have to change a fixture profile!

If it turns out that customizing merge modes is a common use case, we can consider adding a way to override the mergeMode from the patch file/editor, similar to how ranges are already set per fixture in the patch file.

OK, I think I got most of that (I’ll go back over it in a minute).

At the moment, if I just join the signals, I end up with lots of white, which is why I thought weighted average would be best. So you’re saying that I need to make a slight edit to the Generic RGB file: change addition to weightedaverage, and then probably use @mix 1. 1. in the [beam.join] object box? Then if only one _or_ the other sensor is being played, I’ll get the colour changes that sensor is actually sending, but if _both_ are played, I’ll get a 50/50 mix of the two colour signals. That sounds like what I want.
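A quick Python model of why addition drifts towards white while weighted average doesn’t, using two secondary colours. `mix_rgb` is a hypothetical helper that applies the merge rules described earlier to each RGB channel, with values assumed normalised to 0.0 to 1.0 and addition clipped at 1.0. The last line shows a case worth testing in the real patch: if an idle sensor keeps sending zeros at mix 1., this model averages the playing sensor’s colour with black and halves its brightness. Whether Beam treats an idle input that way isn’t confirmed here.

```python
def mix_rgb(colours, mix, mode):
    """Merge RGB triples channel by channel (hypothetical model, values 0.0-1.0)."""
    if len(colours) != len(mix):
        raise ValueError("need exactly one mix value per colour")
    merged = []
    for channel_values in zip(*colours):  # red values, then green, then blue
        weighted = [value * weight for value, weight in zip(channel_values, mix)]
        if mode == "addition":
            merged.append(min(sum(weighted), 1.0))
        elif mode == "weightedaverage":
            merged.append(sum(weighted) / sum(mix))
        else:
            raise ValueError(f"unknown merge mode: {mode}")
    return tuple(merged)


yellow = (1.0, 1.0, 0.0)
magenta = (1.0, 0.0, 1.0)
off = (0.0, 0.0, 0.0)

print(mix_rgb([yellow, magenta], [1.0, 1.0], "addition"))         # (1.0, 1.0, 1.0): white
print(mix_rgb([yellow, magenta], [1.0, 1.0], "weightedaverage"))  # (1.0, 0.5, 0.5)
print(mix_rgb([yellow, off], [1.0, 1.0], "weightedaverage"))      # (0.5, 0.5, 0.0)
```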

By the way, in the other thread I can’t send you the .maxpat, as I’m a new user and thus barred from quite a few things!

I’d be curious to know a bit more about how you’re using your patch and what color mixing you try to achieve. Perhaps we can schedule a zoom call to do a bit of screen sharing? If you’re up for it, I’ll reach out via email together with @Luka

Sure, no problem. Though the (software) colour mixing aspect is more a question of necessity than of art!

Basically, my full workshop system has 14 lights - 12 for the sensors and 2 that sit behind the lycra sails that turn on when no-one is playing, so that the room is never (or only momentarily) completely dark.

The 12 lights are broken into 4 banks of 3; in each bank, 2 lights are controlled by one of the laser distance sensors (played mostly by hand movements), and the other lights are controlled by 2 touch-sensitive boards, 1 pressure (floor) board, and microphone level.

The movement-controlled lights are easy. Although I allow the user to set any of red, green or blue on each light of a pair separately, they are generally set to pair 1 - red, pair 2 - green, pair 3 - blue, pair 4 - red and green (as I say in workshops, nature gave us three primary colours of light, but 4 members in a band). (The option to change the colours controlled is there because sometimes in a school installation, teachers might want everything in a single colour because that’s what the child likes.)

The boards have “keys” - either 12, 8 or 6 (the floor pressure board) - where the varying signal from each key triggers a note on when it passes a threshold set in the software. So in the past I’ve mapped those note on/offs to different fixed secondary and primary colours using a [coll] to do the mapping. The Beam version is probably going to do something similar, but I haven’t looked at that bit yet.
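The threshold-and-lookup idea can be sketched in Python as follows. It is only a model of the approach described above, not the app’s code: sending a note-off when the signal falls back below the threshold, the `PALETTE` table standing in for the [coll], and mapping 12 keys onto six colours with a modulo are all hypothetical choices.

```python
# Hypothetical key -> colour table (primaries and secondaries), standing in for a [coll].
PALETTE = {
    0: (1.0, 0.0, 0.0),  # red
    1: (0.0, 1.0, 0.0),  # green
    2: (0.0, 0.0, 1.0),  # blue
    3: (1.0, 1.0, 0.0),  # yellow
    4: (0.0, 1.0, 1.0),  # cyan
    5: (1.0, 0.0, 1.0),  # magenta
}


def key_events(samples, threshold):
    """Yield ("on", i) when the signal rises to the threshold, ("off", i) when it drops below."""
    active = False
    for i, value in enumerate(samples):
        if not active and value >= threshold:
            active = True
            yield ("on", i)
        elif active and value < threshold:
            active = False
            yield ("off", i)


def colour_for_key(key):
    """Look up a fixed colour for a board key, wrapping around the palette."""
    return PALETTE[key % len(PALETTE)]


print(list(key_events([0.1, 0.6, 0.7, 0.2, 0.8], 0.5)))  # [('on', 1), ('off', 3), ('on', 4)]
print(colour_for_key(9))  # (1.0, 1.0, 0.0): yellow
```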

And why necessity? Most schools where I’ve put permanent installations have room size and budget constraints. I’ve put in systems with 4 lights (very small room), 8, and these days mostly 10 if there’s space. With fewer lights, I generally have 1 or 2 for the movement sensors, which leaves 2 lights to cover any touch/floor boards and the “lights on if no-one’s playing” function. The latter I handle with switching, but there are still 2 devices to one light when someone _is_ playing (e.g. touch board 1 & floor sensor on light A, and touch board 2 & mic on light B), which is where the need to mix signals together comes in.

In my patch, I’ve used a [beam.join 3]: 2 inlets mix the two possible controls for each of the single lights, and the third just adds in the movement sensor stream (added rather than mixed, since it’s on its own channels).

The other part of the app, which I tend to forget about because it’s fairly new (and slightly underdeveloped), is a kind of environmental ambience generator, which one school asked for (I don’t use it in workshops). The user chooses an environment (e.g. rainforest) and a mix of short and long sound loops is played, while a slightly randomised light sequencer fades different colours (primary and secondary) up and down (the colours relate loosely to the environment chosen). This is probably something that would benefit from a rethink using Beam. (Though it wouldn’t go into legacy systems, as I can’t really ask schools with existing installations to cough up more money!)