I was able to get the lights to react to the audio, but not in a way that I found attractive or anything close to what I’m seeing in my head, which is quite intricate, generative and crystalline.

I suggest sharing some concrete video examples of what you have in mind, as well as any examples of failed attempts. Maybe we can help.

The main challenge for me is that I want to control 20-30 moving head lights, each of which has 24-30 DMX channels, so each channel would be a controller line in Ableton, and I want the parameters changing uniquely and in waves.

Drawing a separate automation lane for every DMX channel is definitely not how we intend Beam for Live to be used; that would be very painful.

The number of DMX channels your fixtures have is not reflected in Live. You work with named modulations (e.g. pan, tilt, stroberate), and the tag-based approach means you don’t need an individual automation lane for every fixture in your patch unless you specifically want that. As mentioned in my previous post, you can use Generic’s ability to spread its params across groups of lights within a tag (e.g. if you have 128 lights and 4 params using the same modulation, each param will control 32 lights), use Beam’s LFO Spread parameter, or use MIDI notes.
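To make the 128 lights / 4 params arithmetic concrete, here is a rough Python sketch. It is only an illustration, not Beam’s actual code: the function name `spread_params` is made up, and I’m assuming the lights are split into contiguous, equally sized blocks and that the light count divides evenly by the param count.

```python
def spread_params(num_lights: int, num_params: int) -> dict[int, range]:
    """Assign each param a contiguous, equally sized block of light indices.

    Hypothetical helper for illustration only; the contiguous-block layout
    is an assumption, not documented Beam behaviour.
    """
    if num_params <= 0 or num_lights % num_params != 0:
        raise ValueError("this sketch only handles evenly divisible counts")
    size = num_lights // num_params
    return {p: range(p * size, (p + 1) * size) for p in range(num_params)}


groups = spread_params(128, 4)
for param, lights in groups.items():
    # Report the first and last light index handled by this param.
    print(f"param {param}: lights {lights.start}-{lights.stop - 1} "
          f"({len(lights)} lights)")
```

The point is simply that four automation lanes are enough to give four groups of 32 lights different values, regardless of how many DMX channels each fixture has.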

The Beam for Live 2 Demo Project, which you can access via Beam > Open Demo Project… and are probably already familiar with, demonstrates some of that. Another good example is the Beam for Live 1 Demo Project, which you can run by going to Beam > Open Playground… and then opening User Library/Beam/2.0 Playground Project/Beam Playground Example.als.

I also did a bit of research and found that most fully featured lighting software packages come with effects engines that do all this stuff for you. I don’t want to spend my life drawing controller lines on automation tracks in Ableton. I’m also really keen to have a team working on this with me so it’s not just me doing the programming, and I think if I stay within Beam I will end up being the key person after all.

Beam say that you can unpack their apps which are built in Max for Live and reprogram them, but I’m not a programmer, I’m not at the stage in my life where I want to develop that expertise.

There are several simple ways to create rich lighting effects that don’t require any programming knowledge. Beam for Live’s sequencing and effect engine is Ableton Live: think of the many ways you can generate musical material and apply that mindset to lighting.

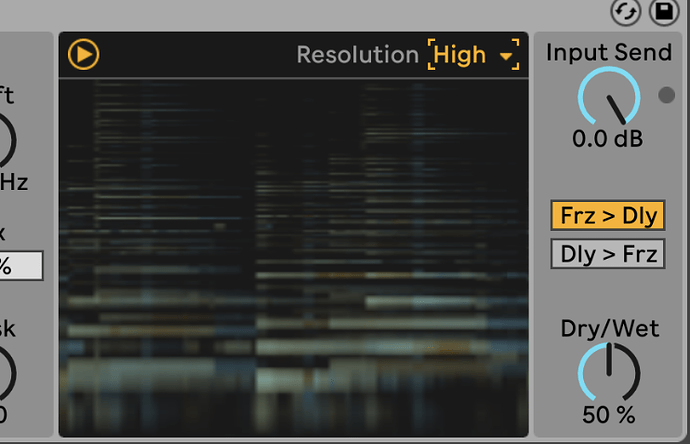

Besides controlling modulation parameters with automation (which you definitely don’t have to draw for every individual DMX channel or modulation, just as you probably don’t individually automate every parameter of every voice of a polyphonic synth), you can also use Modulators (besides Envelope Follower, e.g. Expression Control, Shaper, or a number of third-party M4L devices) or Beam’s own LFO device.
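To picture how a single LFO can make many lights move “uniquely and in waves” without one lane per fixture, here is a rough Python sketch. It is not how Beam’s LFO is implemented: the function `lfo_values` and its parameters are hypothetical, and I’m assuming that “spread” simply means a phase offset distributed evenly across the lights.

```python
import math


def lfo_values(num_lights: int, t: float, rate_hz: float = 0.5,
               spread: float = 1.0) -> list[float]:
    """Return one sine LFO value in [0, 1] per light at time t (seconds).

    `spread` is the fraction of a full cycle distributed across the lights
    (an assumption for illustration): 0.0 means all lights move in unison,
    1.0 means the offsets cover one whole cycle.
    """
    values = []
    for i in range(num_lights):
        phase = spread * i / num_lights  # per-light offset, in cycles
        angle = 2 * math.pi * (rate_hz * t + phase)
        values.append(0.5 + 0.5 * math.sin(angle))
    return values


# One "frame" for four lights at t = 0: each light sits at a different
# point of the same waveform.
frame = lfo_values(4, t=0.0)
print([round(v, 3) for v in frame])
```

One rate and one spread value describe the motion of the whole group, so the number of things you have to automate stays small even with 20-30 fixtures.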

You can trigger envelopes for lights using MIDI notes, which you can enter manually, play and record with an external MIDI instrument, or generate with Live’s MIDI Effects (e.g. Arpeggiator, Random, Chord), MIDI Generators (built-in ones and third-party ones such as MIDI Tools) and M4L sequencers.

You can mix and merge lighting signals generated with these different approaches using Racks and Group Tracks, and apply further lighting processing to the result (e.g. by a lighting operator who controls group effects via MIDI or OSC).

You can even combine approaches and bring in lighting signals from a lighting console or another lighting software and process them with Beam and Live. As with any tool, there are of course also things it cannot do, and creative situations where you are better off using another tool.

That being said, a lot of the above is probably not obvious to many users, and we definitely recognize the need for more examples and tutorials. I can also see how having some lighting presets (a.k.a. “chases”) that you can just drag and drop as a starting point could be helpful, just as a folder of classic drum breaks or even a sacrilegious MIDI chord progression pack can sometimes be helpful. Added to the to-do list, and thanks for the feedback!